AI is revolutionizing how we search, create, and engage with information in India and worldwide. However, not everything AI produces is accurate or reliable. At Ideas To Reach, we emphasize the importance of understanding AI misinformation in India, recognizing AI’s limitations, and navigating this rapidly evolving technology responsibly.

As generative AI tools built on large language models (LLMs), such as Google Gemini and ChatGPT, become mainstream, concerns about AI accuracy and ethics grow. Blindly trusting AI-generated content carries real risks, so it pays to approach AI with informed caution and verify what it produces.

India’s digital ecosystem is expanding rapidly. With the rise of AI-powered search engines and content creation tools, users increasingly depend on these technologies for education, business decisions, and daily queries. Yet, AI misinformation in India is an emerging problem. Many AI models produce outputs based on patterns in training data rather than verified facts. When the data is incomplete, outdated, or biased, which is common in a diverse country like India, AI often generates inaccurate responses.

These inaccuracies are widely known as AI hallucinations: generative AI systems fabricate content that sounds confident but is wrong. This risks spreading misinformation and eroding trust in AI-powered tools, especially if Indian users do not verify the information.

Understanding AI hallucination patterns is critical in India, where language diversity and limited data quality amplify these issues. This is why responsible AI usage and fact-checking are central to combating AI-generated misinformation. For anyone looking to deepen their understanding of how AI impacts search, our blog on how to rank on Google with AI overviews and AI search offers valuable insights into AI’s evolving role.

AI systems such as those behind ChatGPT and Google’s Search Generative Experience (SGE, since rebranded as AI Overviews) rely heavily on machine learning models trained on vast datasets. These models learn to predict text sequences but do not “understand” content as humans do. Consequently, they can produce plausible but false outputs.
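To make the prediction point concrete, here is a minimal Python sketch, not a description of how any production system is built. It trains a toy bigram model on a made-up three-sentence corpus and always picks the statistically most common next word. The corpus and the function names `train_bigrams` and `predict_next` are assumptions for illustration. Because "paris" follows "is" more often than "new" does in this corpus, the model completes a prompt about India with a fluent but false answer.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real models train on vastly larger datasets.
CORPUS = [
    "the capital of india is new delhi",
    "the capital of france is paris",
    "the capital of france is paris",
]


def train_bigrams(sentences):
    """Count how often each word follows another word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, prompt):
    """Return the most frequent follower of the prompt's last word."""
    last = prompt.split()[-1]
    followers = counts.get(last)
    if not followers:
        return None  # the model has never seen this word
    return followers.most_common(1)[0][0]


model = train_bigrams(CORPUS)
answer = predict_next(model, "the capital of india is")
print(answer)  # prints "paris": statistically likely, factually wrong
```

Real LLMs condition on far more context than a single preceding word, but the failure is similar in spirit: frequency in the training data, not verified truth, drives the output.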

AI accuracy issues arise from bias in algorithms or gaps in available data. If the AI’s training data lacks certain facts or presents outdated information, the output becomes unreliable. This explains why Indian users often ask: “Why does AI give wrong information?” and “Is AI reliable for decision-making in 2025?”

At Ideas To Reach, we emphasize that AI should be seen as a powerful assistant, not an infallible oracle. Proper prompt engineering can reduce errors, but human review and additional validation remain necessary. For further detail on the balance between AI’s strengths and limitations, our post on AI poisoning in SEO covers how AI can sometimes mislead if not managed correctly.

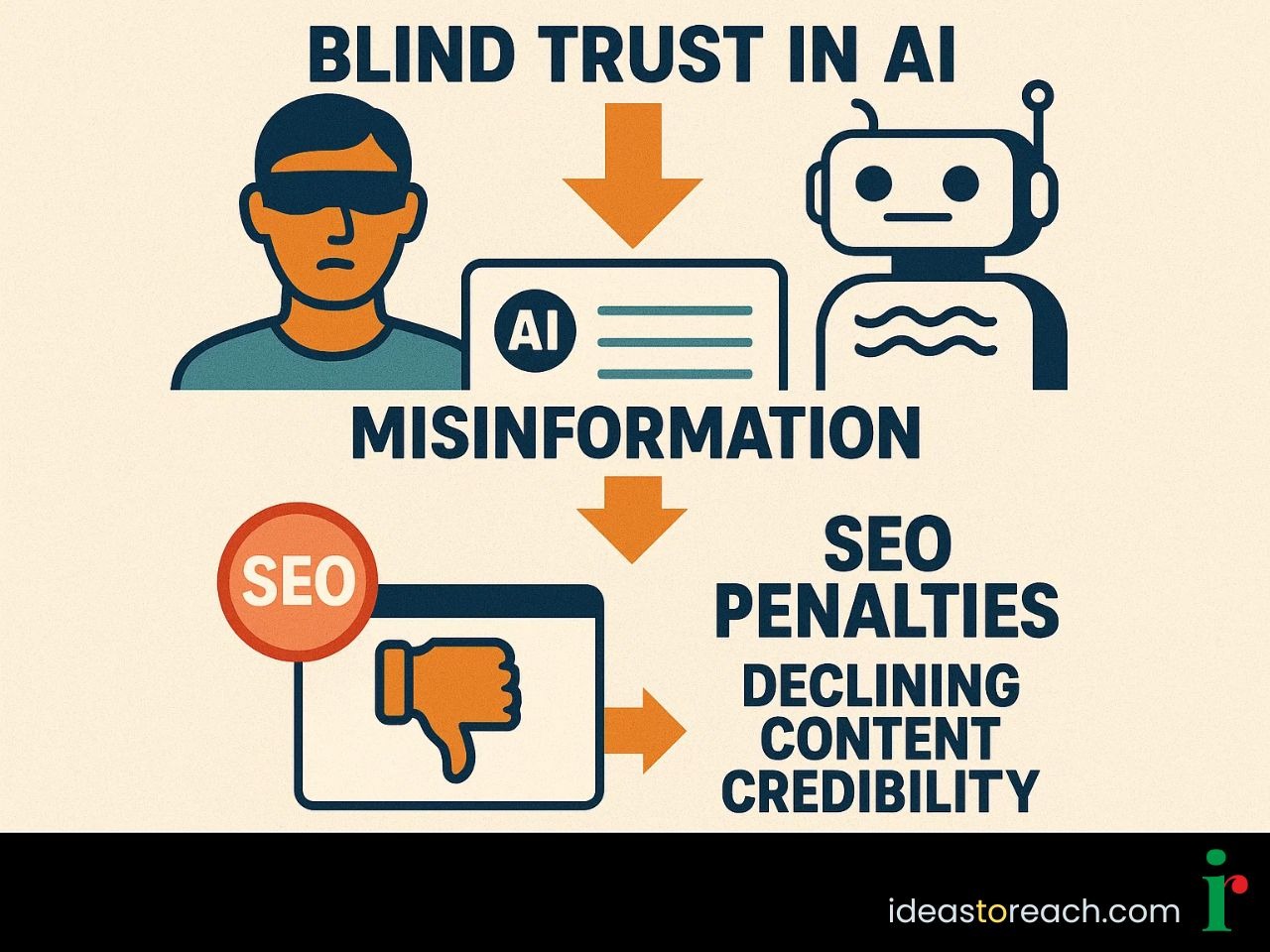

Relying unquestioningly on AI tools brings several risks. AI misinformation can cause poor decision-making and reputational damage. In SEO, AI-generated misinformation may result in content penalties from Google, especially under evolving algorithms such as the Google BlockRank AI ranking algorithm, which evaluates content authenticity more stringently.

Google’s search algorithm updates increasingly reward trustworthy, authoritative, and accurate content that aligns with E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness), the quality framework from its rater guidelines. Low-quality AI-generated information can hurt search rankings and user engagement.

Moreover, ethical concerns in AI, such as bias, privacy, and transparency, are amplified when users place full confidence in AI outputs without scrutiny. In India’s diverse internet environment, educating users on spotting AI misinformation is critical. You can also learn how structured data plays a role in securing better results in AI-powered search by visiting our article on why structured data is the key to winning in AI search.

AI hallucination occurs when a model invents facts, fabricates data, or distorts reality while sounding confident. This can mislead users, especially in high-stakes areas like education, government information, and business communication. For example, ChatGPT may generate incorrect details about Indian regulations or health advice, which users might trust without realizing the error.

Indian users should learn how to spot AI misinformation. This means not taking AI outputs at face value and always verifying information against trusted sources. Using AI features deliberately, such as the new Chrome AI mode for browsing, may also help users separate verified facts from hallucinated content, a topic we explore in detail on our blog about Chrome AI mode.

While AI tools have improved, their reliability for complex decision-making still has limitations. AI can assist by providing data analysis and content suggestions, but nuanced judgment requires human expertise, especially in India, where the digital information ecosystem is complex and varied.

At Ideas To Reach, we advocate balanced AI adoption. By combining AI with SEO best practices, expert human oversight, and prompt engineering, businesses can avoid pitfalls while gaining AI’s efficiency benefits. For an overview of how AI will affect SEO in the years ahead, you might find our post on will Google AI replace SEO? helpful.

Several real-life instances in India highlight AI limitations. Automated chatbots have given incorrect or misleading information on government programs. Likewise, AI-generated SEO content riddled with errors or outdated facts can lower search visibility. These issues are exacerbated by AI poisoning in SEO, where faulty AI-generated content leads to ranking drops.

Business owners and SEO professionals must remain vigilant to such risks. Google’s evolving algorithms prioritize authentic, valuable content, making continuous human review essential. Strategies for maintaining content quality amid AI-generated text are discussed comprehensively in our blog about AI poisoning in SEO.

Indian users and content creators can protect themselves by following these steps:

- Cross-check AI-generated content against credible sources such as government portals and established news websites.
- Use digital fact-checking tools designed to detect misinformation and biased data.
- Stay informed about AI governance in India, which aims to promote ethical AI standards.
- Incorporate human editorial review before publishing AI-generated material.
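
The cross-checking and human-review steps above can be sketched as a simple pre-publish gate. This is an illustrative Python sketch under stated assumptions: the `Claim` class, the `ready_to_publish` rule, the domain suffixes in `TRUSTED_SUFFIXES`, and the example URLs are all hypothetical, and a real editorial workflow would need a properly maintained source list and richer review states.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Assumption: an illustrative allow-list, not an official registry.
TRUSTED_SUFFIXES = (".gov.in", ".nic.in")


@dataclass
class Claim:
    """One AI-generated statement awaiting verification."""
    text: str
    source_urls: list = field(default_factory=list)
    human_reviewed: bool = False


def is_trusted(url):
    """True if the URL's host is a subdomain of a trusted suffix."""
    host = urlparse(url).hostname or ""
    return host.endswith(TRUSTED_SUFFIXES)


def ready_to_publish(claim):
    """Require at least one trusted source AND a human sign-off."""
    has_trusted_source = any(is_trusted(u) for u in claim.source_urls)
    return has_trusted_source and claim.human_reviewed


# Hypothetical usage: the URL below is a placeholder, not a real page.
draft = Claim(
    text="An AI-drafted statement about a government scheme",
    source_urls=["https://portal.example.gov.in/scheme"],
)
print(ready_to_publish(draft))  # False until an editor signs off
draft.human_reviewed = True
print(ready_to_publish(draft))  # True: trusted source plus human review
```

The gate deliberately requires both conditions, so a claim backed by a trusted source still cannot ship without a human editor's sign-off.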

We encourage Indian SEOs and digital marketers to follow these practices to avoid the dangers of blindly trusting AI tools. These steps align well with Google’s Search Quality Evaluator Guidelines, which emphasize trust and accuracy in content.

Ethical concerns in AI focus on mitigating bias, protecting privacy, and ensuring transparency. Deepfake content and digital misinformation threaten online information credibility. AI governance in India emphasizes these concerns to promote trustworthy AI use.

At Ideas To Reach, we uphold AI ethics & safety and strive to educate about responsible AI adoption. For ways to enhance your SEO strategy while staying aligned with AI ethics, see our detailed analysis of why mobile speed matters for SEO as an important factor for AI-powered search experiences.

Navigating AI misinformation in India demands awareness and vigilance. Understanding AI’s strengths alongside its limitations allows us to use this powerful technology effectively while maintaining accuracy and trustworthiness. Relying solely on AI without human oversight can lead to mistakes, but when combined with expert judgment, AI becomes an invaluable tool for digital marketing and SEO. At Ideas To Reach, we are committed to guiding businesses and individuals through the complexities of AI, offering expert insights and best practices for responsible AI adoption in India’s evolving digital landscape.

AI is not always accurate because it relies on patterns in training data, not real-time fact-checking. When the data is incomplete, outdated, or biased, AI models can generate wrong information, often called hallucinations. This is common in generative AI tools used in India and globally.

AI hallucination happens when an AI confidently produces false or misleading information. This creates trust issues, spreads misinformation, and can lead to wrong decisions in education, business, and content creation.

Indian users should cross-check AI outputs with reliable sources, fact-checking websites, and authoritative platforms like government portals and news sites. Avoid relying solely on a single AI-generated answer.

Yes. AI misinformation can hurt SEO by introducing inaccuracies, outdated data, or duplicate content into published pages. Google’s E-E-A-T guidelines favor trustworthy, accurate content, making it vital to fact-check AI-generated information.

Blindly trusting AI tools may lead to poor decisions, false data use, and sharing misinformation. AI should assist, not replace, human judgment. A critical human review process is essential for all AI-generated content.